System Logs 101: Ultimate Power Guide for IT Pros

Ever wondered what secrets your computer is quietly recording? System logs hold the answers—silent witnesses to every crash, login, and error. Let’s dive into the powerful world of system logs and unlock their full potential.

What Are System Logs and Why They Matter

System logs are detailed records generated by operating systems, applications, and network devices that document events, errors, warnings, and user activities. These logs serve as the digital footprint of a system’s behavior, offering invaluable insights for troubleshooting, security monitoring, and performance optimization. Without system logs, diagnosing issues would be like navigating a maze blindfolded.

The Core Purpose of System Logs

At their heart, system logs exist to provide visibility. They capture what happens behind the scenes—when a service starts, when a user logs in, or when a process fails. This visibility is critical for maintaining system health and security.

- Enable real-time monitoring of system performance

- Support forensic analysis during security breaches

- Facilitate compliance with regulatory standards like GDPR or HIPAA

“If it didn’t happen in the logs, it didn’t happen.” — Common saying among system administrators.

Types of Events Captured in System Logs

Different systems log different types of events, but most fall into standard categories. Understanding these helps in filtering and analyzing logs effectively.

- Error messages: Indicate failures, such as a service crash or failed login attempt.

- Warnings: Signal potential issues before they become critical, like low disk space.

- Informational entries: Confirm normal operations, like successful service startups.

- Debug messages: Provide detailed technical data for developers during troubleshooting.
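As a rough illustration of these four categories, the sketch below uses the DEBUG, INFO, WARNING, and ERROR levels from Python's standard `logging` module. The logger name and the messages are invented for the example.

```python
import io
import logging

def build_logger(stream, level=logging.INFO):
    """Return a logger that writes 'LEVEL message' lines to the given stream."""
    logger = logging.getLogger("demo.severity")  # hypothetical logger name
    logger.handlers.clear()       # avoid duplicate handlers on repeated calls
    logger.setLevel(level)
    logger.propagate = False      # keep output out of the root logger
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger

def demo(level=logging.INFO):
    """Emit one message per category and return the lines that passed the level."""
    buffer = io.StringIO()
    log = build_logger(buffer, level)
    log.debug("cache lookup took 3 ms")        # debug: detail for developers
    log.info("service started")                # informational: normal operation
    log.warning("disk space low on /var")      # warning: potential problem
    log.error("login failed for user alice")   # error: something failed
    return buffer.getvalue().splitlines()
```

Calling `demo()` with the default INFO level drops the debug line, which is the usual production setting; `demo(logging.DEBUG)` keeps all four.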

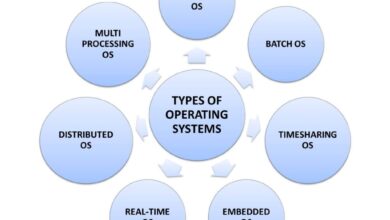

How System Logs Work Across Different Platforms

While the concept of logging is universal, implementation varies significantly across operating systems and environments. From Windows to Linux to cloud platforms, each has its own logging architecture, format, and tools.

Windows Event Logs: Structure and Access

Windows uses the Event Log service to record system, security, and application events. These logs are stored in binary format and accessed via the Event Viewer tool or PowerShell commands.

- Three main log channels: Application, Security, and System

- Each event includes metadata: timestamp, event ID, source, level (error, warning, info), and user context

- Advanced filtering allows admins to search for specific patterns, such as repeated login failures

For deeper analysis, Microsoft provides Windows Event Log documentation, detailing schema and API access.

Linux Syslog and Journalctl: The Backbone of Unix Logging

Linux systems traditionally rely on the syslog protocol, which routes messages from various services to log files in /var/log. Modern distributions use systemd-journald, which enhances logging with structured data and binary storage.

- Key log files include `/var/log/messages`, `/var/log/auth.log`, and `/var/log/kern.log`

- The `journalctl` command allows real-time querying: `journalctl -u ssh.service` shows SSH service logs

- Logs can be filtered by time, priority, and service using powerful command-line syntax
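The journalctl filters described above can also be driven from a script. The following is a minimal sketch, assuming a systemd host and journalctl's JSON output mode (`-o json`); the helper names are hypothetical, and the fallback priority of 6 is an assumption made for the example.

```python
import json
import subprocess

def journalctl_command(unit, since=None, priority=None):
    """Build a journalctl argument list that emits one JSON object per line."""
    cmd = ["journalctl", "-u", unit, "-o", "json", "--no-pager"]
    if since:
        cmd += ["--since", since]   # e.g. "1 hour ago"
    if priority:
        cmd += ["-p", priority]     # e.g. "err" for errors and worse
    return cmd

def parse_journal_lines(lines):
    """Turn journalctl JSON lines into small dicts with commonly used fields."""
    entries = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        entries.append({
            "unit": record.get("_SYSTEMD_UNIT"),
            # Assumption: treat a missing PRIORITY as 6 (informational).
            "priority": int(record.get("PRIORITY", 6)),
            "message": record.get("MESSAGE"),
        })
    return entries

def read_unit_logs(unit, since=None, priority=None):
    """Run journalctl (needs a systemd host) and return the parsed entries."""
    result = subprocess.run(
        journalctl_command(unit, since, priority),
        capture_output=True, text=True, check=True,
    )
    return parse_journal_lines(result.stdout.splitlines())
```

For example, `read_unit_logs("ssh.service", since="1 hour ago", priority="err")` would return recent SSH errors on a host where that unit exists. Parsing is kept separate from the subprocess call so it can be exercised without a running journal.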

For administrators, mastering rsyslog configuration is essential for scaling log management in enterprise environments.

Cloud and Containerized Environments: Logging at Scale

In cloud-native architectures, traditional file-based logging is often replaced by centralized, streaming models. Containers, microservices, and serverless functions generate logs that must be aggregated in real time.

- Kubernetes uses `kubectl logs` to retrieve pod logs, but production setups require tools like Fluentd or Loki

- AWS CloudWatch and Google Cloud Logging offer managed solutions for collecting and analyzing logs

- Serverless platforms like AWS Lambda automatically stream logs to CloudWatch Logs

As systems become more distributed, the challenge shifts from generating logs to managing their volume and velocity.

The Critical Role of System Logs in Cybersecurity

System logs are not just for troubleshooting; they are a frontline defense in cybersecurity. Unauthorized access attempts, privilege escalations, and malware executions often leave traces in the logs.

Detecting Intrusions Through Log Analysis

Security Information and Event Management (SIEM) systems like Splunk, IBM QRadar, and Elastic Security ingest system logs to detect anomalies. By correlating events across multiple sources, they can identify attack patterns.

- Sudden spike in failed login attempts may indicate a brute-force attack

- Unexpected process execution from temporary directories can signal malware

- Logs showing lateral movement within a network help trace attacker paths
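The first pattern, a spike in failed logins, can be sketched as a sliding-window count per source address. This is a simplified stand-in for what a SIEM correlation rule does, not how any particular product implements it; the event tuple layout, the threshold of 5, and the one-minute window are assumptions chosen for illustration.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

def find_bruteforce_sources(events, threshold=5, window=timedelta(minutes=1)):
    """Return source IPs with at least `threshold` failed logins inside any `window`.

    `events` is an iterable of (timestamp, source_ip, succeeded) tuples,
    already sorted by timestamp.
    """
    recent = defaultdict(deque)   # source_ip -> timestamps of recent failures
    flagged = set()
    for timestamp, source_ip, succeeded in events:
        if succeeded:
            continue
        failures = recent[source_ip]
        failures.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while timestamp - failures[0] > window:
            failures.popleft()
        if len(failures) >= threshold:
            flagged.add(source_ip)
    return flagged
```

Six failures five seconds apart from one address would be flagged, while the same number spread over an hour would not. Real deployments usually also track the targeted account and tune the threshold per service.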

“The difference between a breach and a disaster is how quickly you detect it—logs are your eyes.” — Cybersecurity expert

Compliance and Audit Requirements for System Logs

Regulatory frameworks such as PCI-DSS, HIPAA, and SOX mandate the collection, retention, and protection of system logs. Organizations must prove they are monitoring access and detecting threats.

- PCI-DSS requires logging of all access to cardholder data

- HIPAA demands audit trails for any access to protected health information

- Logs must be protected from tampering—often achieved through write-once storage or cryptographic hashing

Failure to maintain proper system logs can result in fines, legal liability, and loss of certification.

Log Integrity and Anti-Tampering Measures

Attackers often attempt to erase or alter logs to cover their tracks. Ensuring log integrity is therefore a critical security practice.

- Centralized log servers prevent local deletion by compromised hosts

- Using TLS to encrypt log transmission prevents interception and modification

- Implementing log signing or blockchain-based immutability adds another layer of trust

Tools like OSSEC and Wazuh include file integrity monitoring to detect unauthorized changes to log files.
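One way to picture the cryptographic side of log integrity is a hash chain, in which each entry's digest also covers the digest of the entry before it. The sketch below is illustrative only: the function names and the all-zero starting value are assumptions, and it is not how OSSEC or Wazuh implement their checks.

```python
import hashlib

GENESIS = "0" * 64  # assumed starting value for the first link in the chain

def chain_entries(messages):
    """Return (message, digest) pairs where each digest covers the previous digest."""
    chained, previous = [], GENESIS
    for message in messages:
        digest = hashlib.sha256((previous + message).encode("utf-8")).hexdigest()
        chained.append((message, digest))
        previous = digest
    return chained

def verify_chain(chained):
    """Return the index of the first entry that fails verification, or None."""
    previous = GENESIS
    for index, (message, digest) in enumerate(chained):
        expected = hashlib.sha256((previous + message).encode("utf-8")).hexdigest()
        if digest != expected:
            return index
        previous = digest
    return None
```

Editing or removing an entry breaks every digest from that point on, so `verify_chain` reports where the damage starts. A plain chain can still be recomputed by an attacker who controls the whole file, which is why the latest digest is usually stored off-host or the chain is keyed with a secret.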

Best Practices for Managing System Logs

Effective log management goes beyond just collecting data. It involves strategy, tooling, and ongoing maintenance to ensure logs remain useful and accessible.

Centralized Logging: Why You Need a Log Aggregation System

As organizations grow, logs are scattered across servers, applications, and cloud services. Centralizing them into a single platform improves visibility and efficiency.

- Reduces time spent logging into individual machines

- Enables cross-system correlation for faster root cause analysis

- Supports automated alerting based on predefined thresholds

Solutions like the ELK Stack (Elasticsearch, Logstash, Kibana) and Graylog are popular open-source choices. For enterprise needs, Datadog and Sumo Logic offer robust cloud-based alternatives.
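Cross-system correlation largely comes down to placing entries from different hosts on one timeline. A minimal sketch of that idea follows, assuming each line starts with an ISO 8601 timestamp followed by a space; the host names and line format are invented for the example, and real aggregators do far more (parsing, indexing, clock-skew handling).

```python
import heapq
from datetime import datetime

def parse_line(host, line):
    """Split 'ISO-8601-timestamp message' into (timestamp, host, message)."""
    stamp, _, message = line.partition(" ")
    return datetime.fromisoformat(stamp), host, message

def merge_timelines(logs_by_host):
    """Merge per-host logs (each already in time order) into one timeline."""
    streams = [
        [parse_line(host, line) for line in lines]
        for host, lines in logs_by_host.items()
    ]
    # heapq.merge interleaves already-sorted inputs without re-sorting everything.
    return list(heapq.merge(*streams))
```

Feeding it a web server log and a database log makes it easy to see, for instance, that a connection pool warning on the database came one second before a 502 on the web tier.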

Log Rotation and Retention Policies

Logs can consume massive amounts of disk space. Without proper rotation, systems can run out of storage, leading to service outages or lost data.

- Use tools like `logrotate` on Linux to compress and archive old logs

- Define retention periods based on compliance needs (e.g., 90 days for general ops, 1 year for financial systems)

- Delete or archive logs securely to prevent data leaks

Automated scripts should monitor log directory sizes and trigger alerts when thresholds are exceeded.
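A monitoring script of this kind can be quite small. The sketch below walks a directory, sums file sizes, and returns a warning string when a limit is exceeded; the function names and the idea of returning a message rather than sending a notification are assumptions made to keep the example self-contained.

```python
import os

def directory_size_bytes(path):
    """Sum the sizes of all regular files below `path`, skipping symlinks."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full_path = os.path.join(root, name)
            try:
                if not os.path.islink(full_path):
                    total += os.path.getsize(full_path)
            except OSError:
                # A file can be rotated away between listing and stat; skip it.
                continue
    return total

def check_log_directory(path, limit_bytes):
    """Return a warning string when the directory exceeds the limit, else None."""
    used = directory_size_bytes(path)
    if used > limit_bytes:
        return f"{path} uses {used} bytes, above the {limit_bytes}-byte limit"
    return None
```

In practice something like `check_log_directory("/var/log", 5 * 1024**3)` would run from a scheduler, with any non-empty result passed to whatever alerting channel is already in place.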

Standardizing Log Formats for Easier Analysis

Inconsistent log formats make parsing and analysis difficult. Adopting a standard format like JSON or using structured logging libraries improves machine readability.

- Use key-value pairs: `{"timestamp": "2025-04-05T10:00:00Z", "level": "ERROR", "message": "Login failed"}`

- Include context: user ID, IP address, session ID, and service name

- Leverage logging frameworks like Log4j, Serilog, or Winston that support structured output

Structured logs integrate seamlessly with SIEMs and analytics platforms, reducing parsing errors and improving search performance.
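To make the structured-logging idea concrete, here is a minimal sketch of a JSON formatter built on Python's standard `logging` module, used in place of the frameworks named above. The context field names are assumptions for the example, and exception details are left out for brevity.

```python
import json
import logging
from datetime import datetime, timezone

class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON object."""

    # Assumed names for the context fields; adjust to your own conventions.
    CONTEXT_FIELDS = ("user_id", "ip_address", "session_id", "service")

    def format(self, record):
        created = datetime.fromtimestamp(record.created, tz=timezone.utc)
        payload = {
            "timestamp": created.isoformat(timespec="seconds"),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        # Copy context supplied through `extra=` when it is present.
        for field in self.CONTEXT_FIELDS:
            if hasattr(record, field):
                payload[field] = getattr(record, field)
        return json.dumps(payload)
```

With this formatter attached to a handler, a call such as `logger.error("Login failed", extra={"user_id": "u-42", "ip_address": "203.0.113.7"})` produces one JSON object per line that downstream tools can parse without regular expressions.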

Tools and Technologies for Analyzing System Logs

The right tools can transform raw system logs into actionable insights. From open-source utilities to enterprise platforms, the market offers solutions for every need and budget.

Open-Source Log Management Tools

For teams with technical expertise and budget constraints, open-source tools provide powerful capabilities without licensing fees.

- ELK Stack: Elasticsearch stores logs, Logstash processes them, and Kibana visualizes data. Highly customizable but requires significant setup.

- Graylog: Offers a user-friendly interface with built-in alerting and dashboards. Easier to deploy than ELK for beginners.

- Loki: Developed by Grafana Labs, Loki indexes logs by labels rather than full text, making it lightweight and fast.

These tools are ideal for DevOps teams managing complex infrastructures.

Commercial and Cloud-Based Log Analytics Platforms

Enterprises often prefer managed services that reduce operational overhead and offer scalability and support.

- Datadog: Combines log management with monitoring, APM, and security features in a unified platform.

- Splunk: Industry leader in log analysis, known for its powerful Search Processing Language (SPL).

- Azure Monitor: Integrates seamlessly with Microsoft environments and supports hybrid cloud setups.

These platforms typically offer advanced features like machine learning-based anomaly detection and compliance reporting.

Real-Time Monitoring and Alerting Systems

Waiting for a system to fail before checking logs is not proactive. Real-time monitoring turns logs into early warning systems.

- Set up alerts for critical events: disk full, service down, multiple failed logins

- Use tools like Prometheus + Alertmanager or Zabbix to trigger notifications via email, Slack, or PagerDuty

- Define escalation policies to ensure alerts reach the right person

Effective alerting reduces mean time to detection (MTTD) and mean time to resolution (MTTR), improving overall system reliability.

Common Challenges in System Logs Management

Despite their value, managing system logs comes with significant challenges. From data overload to skill gaps, organizations must navigate several obstacles to get the most out of their logs.

Log Volume and Noise: Separating Signal from Noise

Large environments can generate terabytes of logs per day. Much of this data is routine and non-actionable, making it hard to spot real issues.

- Implement log filtering to suppress low-priority messages (e.g., debug logs in production)

- Use AI-powered tools to detect anomalies and surface only relevant events

- Apply tagging and categorization to prioritize critical logs

Without filtering, teams risk alert fatigue, where important warnings are ignored due to excessive noise.

Performance Impact of Excessive Logging

While logging is essential, too much of it can degrade system performance. Writing logs consumes CPU, memory, and I/O resources.

- Avoid logging at the DEBUG level in production environments

- Batch log writes instead of writing every event immediately

- Use asynchronous logging to prevent application blocking

Profiling tools can help measure the performance cost of logging and optimize accordingly.
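Asynchronous logging can be sketched with the `QueueHandler` and `QueueListener` classes from Python's standard library: the application thread only puts records on an in-memory queue, and a background thread performs the slow write. The logger name and the helper function are hypothetical.

```python
import logging
import logging.handlers
import queue

def start_async_logging(target_handler, level=logging.INFO):
    """Route records through a queue so callers do not wait on I/O.

    Returns (logger, listener); call listener.stop() at shutdown to flush.
    """
    log_queue = queue.Queue(-1)   # unbounded in-memory queue
    listener = logging.handlers.QueueListener(log_queue, target_handler)
    listener.start()              # background thread performs the slow write

    logger = logging.getLogger("demo.async")   # hypothetical logger name
    logger.handlers.clear()
    logger.setLevel(level)        # INFO by default, so DEBUG is dropped early
    logger.propagate = False
    logger.addHandler(logging.handlers.QueueHandler(log_queue))
    return logger, listener
```

The trade-off is that records still in the queue are lost if the process is killed before `listener.stop()` runs, so this pattern suits high-volume application logs better than audit trails.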

Skill Gaps and Lack of Standardization

Many organizations lack staff trained in log analysis. Additionally, inconsistent logging practices across teams make correlation difficult.

- Invest in training for system administrators and security analysts

- Adopt company-wide logging standards and templates

- Document common log patterns and their meanings in a knowledge base

Standardization ensures that logs are not only collected but also understood and acted upon.

Future Trends in System Logs and Log Management

The world of system logs is evolving rapidly. As technology advances, so do the methods and expectations for logging and analysis.

AI and Machine Learning in Log Analysis

Artificial intelligence is transforming log management by enabling predictive analytics and automated root cause identification.

- ML models can learn normal behavior and flag deviations without predefined rules

- Natural language processing (NLP) helps extract meaning from unstructured log messages

- Auto-correlation engines link related events across systems to reconstruct incident timelines

Platforms like Google’s Chronicle and Microsoft Sentinel are already integrating AI to enhance threat detection.
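The idea of learning normal behavior and flagging deviations can be shown with a much simpler statistical baseline than the models these platforms use. The sketch below is not a trained ML model; it is a z-score check over event counts, and the baseline size and threshold are assumptions picked for illustration.

```python
from statistics import mean, stdev

def find_anomalies(counts, baseline_size=10, threshold=3.0):
    """Flag indexes whose count sits more than `threshold` deviations from the baseline.

    `counts` holds events per interval (for example, errors per minute).
    The first `baseline_size` values are assumed to represent normal behavior.
    """
    baseline = counts[:baseline_size]
    if len(baseline) < 2:
        raise ValueError("need at least two baseline values")
    center = mean(baseline)
    spread = stdev(baseline) or 1.0   # avoid dividing by zero on a flat baseline
    return [
        index
        for index, value in enumerate(counts[baseline_size:], start=baseline_size)
        if abs(value - center) / spread > threshold
    ]
```

With a baseline of four to six errors per minute, a minute with 48 errors is flagged while a minute with six is not. Production systems typically account for seasonality and update the baseline continuously.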

Serverless and Edge Computing: New Logging Frontiers

As computing moves to the edge and serverless functions, traditional logging models face new challenges.

- Serverless functions have short lifespans, making log capture time-sensitive

- Edge devices may have limited storage and connectivity, requiring selective logging

- Federated logging architectures are emerging to handle decentralized data

Future tools will need to be lightweight, adaptive, and capable of operating in low-resource environments.

The Rise of Observability and Unified Telemetry

Logging is no longer standalone. It’s part of a broader observability strategy that includes metrics, traces, and user feedback.

- OpenTelemetry is becoming the standard for collecting logs, metrics, and traces in a unified format

- Observability platforms like New Relic and Dynatrace provide end-to-end visibility across the stack

- Correlating logs with distributed traces helps pinpoint performance bottlenecks in microservices

The future belongs to integrated systems where logs are just one piece of a holistic monitoring puzzle.
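Correlating logs with traces usually means stamping every log line with the ID of the request that produced it. The sketch below shows the mechanism with Python's standard library only; it does not use the OpenTelemetry API, and the variable, class, and logger names are hypothetical.

```python
import contextvars
import logging

# Trace ID of the request currently being handled ("-" when there is none).
current_trace_id = contextvars.ContextVar("current_trace_id", default="-")

class TraceIdFilter(logging.Filter):
    """Copy the active trace ID onto every record so formatters can print it."""

    def filter(self, record):
        record.trace_id = current_trace_id.get()
        return True   # never drop the record, only annotate it

def build_traced_logger(stream):
    """Return a logger whose lines start with the active trace ID."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(trace_id)s %(levelname)s %(message)s"))
    handler.addFilter(TraceIdFilter())
    logger = logging.getLogger("demo.trace")   # hypothetical logger name
    logger.handlers.clear()
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.addHandler(handler)
    return logger
```

A request handler would call `current_trace_id.set(...)` on entry and reset it on exit, so every message logged in between carries the same ID and can be joined with the matching trace.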

How to Get Started with System Logs Today

You don’t need a million-dollar SIEM to benefit from system logs. Start small, build good habits, and scale as needed.

Assess Your Current Logging Setup

Begin by auditing what logs you already have. Are they being collected? Are they secure? Are they accessible?

- Inventory all systems and identify their default log locations

- Check log retention policies and rotation settings

- Verify that critical services (like firewalls and authentication) are logging properly

Define Logging Goals and Requirements

Ask: What do you want to achieve with your logs? Is it security, compliance, performance, or troubleshooting?

- Map logging needs to business objectives

- Prioritize systems based on risk and criticality

- Establish KPIs, such as log coverage percentage or mean time to detect incidents

Implement a Simple Logging Pipeline

Start with a basic centralized logging setup. For example:

- Use `rsyslog` to forward Linux logs to a central server

- Install Filebeat to ship logs to Elasticsearch

- Create a Kibana dashboard to visualize key metrics

Once operational, iterate by adding alerts, retention policies, and user training.
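Applications can feed the same central server directly. As a minimal sketch, assuming a syslog collector that accepts UDP on the given host and port, Python's standard `SysLogHandler` can forward records without any extra agent; the logger name and message format are invented for the example.

```python
import logging
import logging.handlers

def build_forwarding_logger(host, port=514):
    """Return a logger that sends each record to a syslog collector over UDP."""
    handler = logging.handlers.SysLogHandler(address=(host, port))
    handler.setFormatter(logging.Formatter("%(name)s: %(levelname)s %(message)s"))
    logger = logging.getLogger("demo.forwarder")   # hypothetical logger name
    logger.handlers.clear()
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.addHandler(handler)
    return logger
```

UDP delivery is fire-and-forget, so messages can be lost silently; for anything audit-related, a TCP or TLS transport configured on the collector side is the safer choice.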

What are system logs used for?

System logs are used for monitoring system health, diagnosing errors, detecting security threats, ensuring compliance with regulations, and auditing user activities. They provide a chronological record of events that helps IT teams maintain and secure their environments.

How long should system logs be kept?

Retention periods depend on industry regulations and organizational policies. General IT operations may keep logs for 30–90 days, while regulated industries like finance or healthcare may retain them for 1–7 years. Always align retention with compliance requirements like GDPR or HIPAA.

Can system logs be faked or tampered with?

Yes, attackers can alter or delete local logs to hide their activities. To prevent tampering, logs should be sent to a secure, centralized server over encrypted channels and stored in write-once media. Implementing log integrity checks and using tools like Wazuh enhances protection.

What is the difference between logs and events?

An event is a single occurrence in a system (e.g., a user login), while a log entry is the record that documents that event. A log collects many such entries over time and provides context such as timestamps, severity levels, and source information.

Which tools are best for analyzing system logs?

Popular tools include Splunk, ELK Stack (Elasticsearch, Logstash, Kibana), Graylog, Datadog, and Loki. The best choice depends on your environment size, budget, and technical expertise. Open-source tools offer flexibility, while commercial platforms provide ease of use and support.

System logs are far more than just technical records—they are the heartbeat of your IT infrastructure. From diagnosing crashes to stopping cyberattacks, they provide the visibility needed to keep systems running smoothly and securely. By understanding how they work, adopting best practices, and using the right tools, organizations can turn raw log data into powerful insights. Whether you’re a beginner or a seasoned pro, the journey into system logs starts with a single line of text. Start logging wisely, analyze proactively, and stay ahead of the curve.
